
In this blog post, I continue the thoughts and insights from my previous post about FAIRCon 2023. Making Cyber Risk Quantification (CRQ) more actionable and demonstrating its value is vital to the success of your risk management program. Another way to make CRQ more actionable is to use it to help prioritize vulnerabilities. At FAIRCon, one talk attempted just that (Case Study: Patch Prioritization with FAIRCAM, by Denny Wan, John Linford, and Sasha Romanosky).

I appreciated that the presenters openly said they hadn't quite figured this out yet. They were trying to map EPSS (Exploit Prediction Scoring System) to the Factor Analysis of Information Risk Controls Analytics Model (FAIR-CAM). The challenge they ran into, much as with FAIR-CAM itself, was that the mapping is hard and far from straightforward. I admit I am nowhere near an expert on the true impact of vulnerabilities, but I have been doing risk quantification for over eight years, and people have been trying to figure out how to use FAIR to measure the impact of vulnerabilities for many years. The concerns I raised in my previous blog post also apply here. More specifically: how do we justify which vulnerability to patch first?

This question raises a few others that I will attempt to address:

- Why does prioritizing vulnerabilities matter?

- How can this be done better than how it’s currently being done?

- Why does this matter, given that vulnerability scanners from vendors such as Tenable, Rapid7, and Qualys already have rating scales?

Don’t get me wrong: the vulnerability scanners mentioned above, and others, are excellent and much-needed tools in your security toolbelt. Their rating scales are even a step in the right direction for prioritizing vulnerabilities. The challenge is that a qualitative criticality scale (Severe, High, Medium, Low) or a single score such as CVSS or EPSS makes it hard to get the business to buy into why a given vulnerability is so critical. Business stakeholders don’t understand these industry scoring systems, and qualitative scales mean little to them (e.g., how much more risk is “High” than “Medium”?). When there are hundreds, if not thousands, of “critical” vulnerabilities to remediate, deciding which ones to patch first, and conveying that to system and application owners to get their buy-in, is a constant challenge. If everything is important, nothing is. The data needs to be translated into risk terms: dollars and cents. Doing this shouldn’t be difficult. To be clear, I am NOT saying ditch those rating scales; I am saying augment them with risk exposure. That way, you can find out what is truly critical to business operations, not just critical from a security perspective, and strike the right balance.
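To make “augment, don’t replace” concrete, here is a minimal Python sketch that keeps the scanner’s severity as the primary sort key and uses dollar exposure only to break ties among findings with the same rating. The severity ranking, the `Finding` fields, the `prioritize` function, and all numbers and placeholder CVE IDs are hypothetical; in practice the exposure figure would come from a CRQ analysis.

```python
from dataclasses import dataclass

# Hypothetical rank order for a scanner's qualitative scale (lower = more urgent).
SEVERITY_RANK = {"Critical": 0, "High": 1, "Medium": 2, "Low": 3}


@dataclass
class Finding:
    cve: str             # CVE identifier reported by the scanner
    severity: str        # qualitative rating from the scanner
    exposure_usd: float  # hypothetical annualized loss exposure, in dollars


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Keep the scanner's severity as the primary key, then break ties
    by financial exposure (largest first) rather than discarding the scale."""
    return sorted(
        findings,
        key=lambda f: (SEVERITY_RANK[f.severity], -f.exposure_usd),
    )


if __name__ == "__main__":
    # Illustrative placeholder data, not real measurements.
    findings = [
        Finding("CVE-0000-0001", "Critical", 120_000.0),
        Finding("CVE-0000-0002", "Critical", 2_400_000.0),
        Finding("CVE-0000-0003", "High", 900_000.0),
        Finding("CVE-0000-0004", "Critical", 15_000.0),
    ]
    for f in prioritize(findings):
        print(f"{f.cve}  {f.severity:<8}  ${f.exposure_usd:,.0f}")
```

Among the three “Critical” findings, the one with $2.4M of exposure rises to the top and the $15K one drops to the bottom, while the “High” finding still sorts after every “Critical” one because the original scale is preserved. Sorting on exposure alone is the other obvious design choice, if the business is ready to rank purely in financial terms.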

How can this be done?

First, let me address how this is done with FAIR. In a FAIR analysis, to determine how much exposure you have to a particular vulnerability, the risk practitioner must first understand the vulnerability, how it could be (or is being) exploited, and its impact, i.e., where it affects the loss flow. In other words, could exploiting the vulnerability cause a data breach or an outage? This usually requires additional conversations with the threat intelligence and vulnerability management teams. Next, the risk practitioner must determine how the control environment affects whether a threat actor succeeds or fails in exploiting that vulnerability. This may require further conversations with control owners or other subject matter experts. All of this takes time to coordinate, which is a real problem when the vulnerability is already being exploited by attackers.
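The FAIR decomposition behind that analysis can be sketched in a few lines. This is a simplified Monte Carlo under stated assumptions, not a full FAIR implementation: loss event frequency (LEF) is threat event frequency (TEF) times vulnerability, the probability that a threat event becomes a loss event given the control environment, and annualized loss is LEF times loss magnitude (LM). The function name and every (min, most likely, max) estimate are hypothetical, and triangular distributions stand in for the PERT distributions FAIR practitioners commonly use.

```python
import random
import statistics

Estimate = tuple[float, float, float]  # (minimum, most likely, maximum)


def simulate_annual_loss(tef: Estimate, vuln: Estimate, lm: Estimate,
                         trials: int = 10_000, seed: int = 42) -> list[float]:
    """Simulate annualized loss exposure for one vulnerability scenario.

    tef  -- threat event frequency (attempts per year)
    vuln -- probability an attempt becomes a loss event, given controls
    lm   -- loss magnitude per loss event, in dollars
    """
    rng = random.Random(seed)  # seeded so results are reproducible
    losses = []
    for _ in range(trials):
        # random.triangular takes (low, high, mode), so the argument order
        # differs from our (min, most likely, max) tuples.
        tef_draw = rng.triangular(tef[0], tef[2], tef[1])
        vuln_draw = rng.triangular(vuln[0], vuln[2], vuln[1])
        lm_draw = rng.triangular(lm[0], lm[2], lm[1])
        lef = tef_draw * vuln_draw    # loss event frequency
        losses.append(lef * lm_draw)  # annualized loss for this trial
    return losses


if __name__ == "__main__":
    # Hypothetical estimates for an internet-facing vulnerability.
    losses = simulate_annual_loss(
        tef=(0.5, 2.0, 6.0),
        vuln=(0.05, 0.20, 0.50),
        lm=(50_000, 250_000, 2_000_000),
    )
    p90 = statistics.quantiles(losses, n=10)[-1]  # 90th percentile
    print(f"Mean annualized loss: ${statistics.mean(losses):,.0f}")
    print(f"90th percentile:      ${p90:,.0f}")
```

The vulnerability estimate is where the conversations described above land: if a newly disclosed CVE lets attackers bypass a control, its range shifts upward and the simulated exposure follows. Multiplying frequency by a single per-event loss is a further simplification; a fuller model would sample the number of loss events per year and draw a separate magnitude for each.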

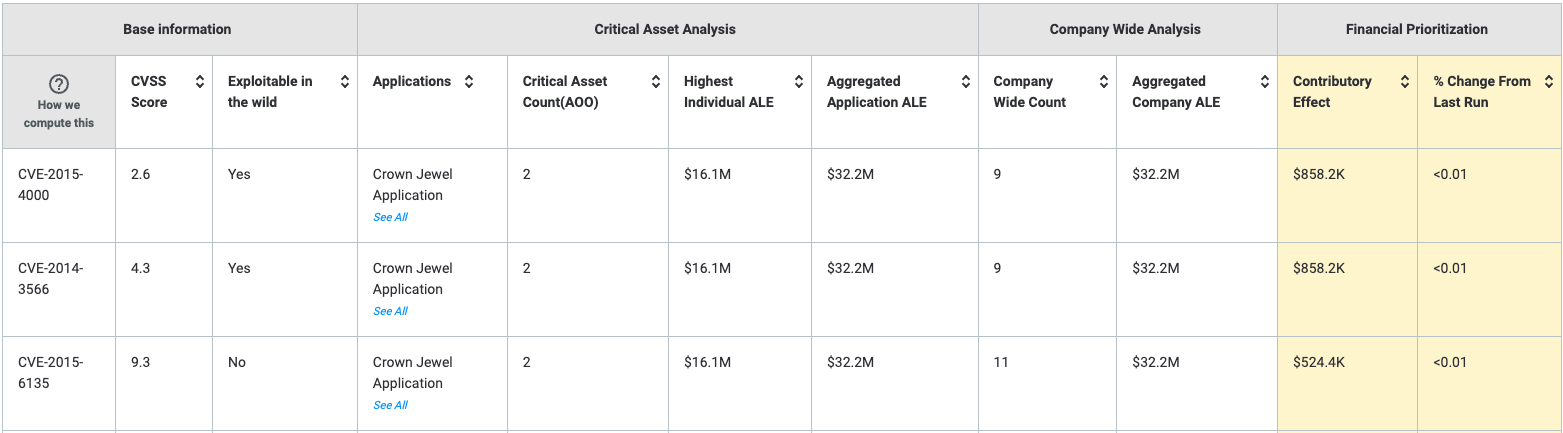

Now, the easy button: if an organization connects its vulnerability scanner to the ThreatConnect Risk Quantifier (RQ) platform, it can attach a financial risk exposure, in dollars, to each vulnerability.


RQ accomplishes this by assessing your risks from the attacker-behavior perspective using MITRE ATT&CK. RQ analyzes attack patterns to determine what an attacker can and cannot do in your environment. This step is important because exploiting a vulnerability is only one way for an attacker to advance along their path, not the only method they will try. The platform analyzes the attack path automatically, so the risk practitioner does not have to guess where a particular vulnerability affects the model. Through RQ, we can automatically determine how much exposure each individual CVE contributes to your environment. With this information, an organization can improve its vulnerability management program by deciding, and defending to the business, which vulnerabilities to remediate first. This helps solve the age-old problem of determining which critical vulnerability is more critical than another, all other things being equal. This approach goes beyond what the FAIR model was designed for, which, simply put, is high-level scenario analysis. I surmise that is why people struggle to “quantify the risk associated with vulnerabilities.”
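As a toy illustration of the general idea of attributing exposure to individual CVEs along attack paths (not RQ’s actual method), the Python sketch below assumes a few attack paths built from MITRE ATT&CK technique IDs, a hypothetical probability that each technique succeeds against current controls, and a hypothetical boost that a specific open CVE gives to one technique. A CVE’s contribution is the exposure that disappears when only that CVE is patched. All dictionaries, function names, probabilities, dollar figures, and placeholder CVE IDs are made up, and treating paths as independent is a simplification because they share steps.

```python
from math import prod

# Hypothetical probability that each ATT&CK technique succeeds against
# the current control environment (placeholder values).
BASELINE = {
    "T1566": 0.30,  # Phishing
    "T1190": 0.05,  # Exploit Public-Facing Application
    "T1068": 0.10,  # Exploitation for Privilege Escalation
    "T1486": 0.60,  # Data Encrypted for Impact
}

# Hypothetical attack paths toward one loss outcome (e.g., a ransomware outage).
PATHS = [
    ["T1566", "T1068", "T1486"],
    ["T1190", "T1068", "T1486"],
]

# Hypothetical effect of each open CVE: the technique it makes easier and
# that technique's success probability while the CVE is unpatched.
CVE_EFFECTS = {
    "CVE-0000-1111": ("T1190", 0.70),
    "CVE-0000-2222": ("T1068", 0.50),
}

ATTEMPTS_PER_YEAR = 4.0       # hypothetical threat event frequency
LOSS_PER_EVENT = 1_500_000.0  # hypothetical loss magnitude, in dollars


def exposure(open_cves: set[str]) -> float:
    """Annualized exposure given the set of unpatched CVEs."""
    p = dict(BASELINE)  # copy so the baseline is never mutated
    for cve in open_cves:
        technique, p_with_cve = CVE_EFFECTS[cve]
        p[technique] = max(p[technique], p_with_cve)
    # Probability that no path succeeds, treating paths as independent.
    p_no_path = prod(1 - prod(p[t] for t in path) for path in PATHS)
    # attempts/yr x P(an attempt succeeds via some path) x loss per event
    return ATTEMPTS_PER_YEAR * (1 - p_no_path) * LOSS_PER_EVENT


def marginal_contributions(open_cves: set[str]) -> dict[str, float]:
    """Exposure removed by patching each CVE on its own."""
    total = exposure(open_cves)
    return {cve: total - exposure(open_cves - {cve}) for cve in open_cves}


if __name__ == "__main__":
    open_cves = {"CVE-0000-1111", "CVE-0000-2222"}
    ranked = sorted(marginal_contributions(open_cves).items(),
                    key=lambda kv: kv[1], reverse=True)
    for cve, delta in ranked:
        print(f"{cve}: patching removes ~${delta:,.0f}/yr of exposure")
```

In this toy model, CVE-0000-2222 ranks above CVE-0000-1111 even though CVE-0000-1111 opens an internet-facing entry point, because privilege escalation (T1068) sits on both paths. That is the kind of “which critical is more critical” distinction a severity label alone cannot make. The two contributions also add up to more than the total increase over baseline, since the CVEs compound each other along the second path.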

You can learn more about ThreatConnect’s Risk Quantifier here. For more specific information on how we can apply exposure to your vulnerabilities, schedule a demo of the RQ platform with one of our CRQ experts.