
As more companies look to quantify cyber risk in financial terms, a question we hear all the time is, “Which model (or approach) should I use?” I saw an interesting quote from a Gartner® research note titled “Drive Business Action with Cyber Risk Quantification” that spoke about where the CRQ space is going:

“Nearly 70% of SRM leaders are planning to deploy CRQ, based on statistical modeling and machine learning (ML) techniques during the next two years.”

There are a lot of CRQ models out there, from FAIR to ones using machine learning. Yet a common critique of these non-FAIR models is that they’re not “open.” FAIR is an “open” model – it uses manual inputs combined with Monte Carlo simulations and Beta-PERT distributions. The approach has been around for years, and if you want, you can build your own FAIR implementation in Excel (we have – let me know if you’d like a copy).
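To make the “open” math concrete, here is a minimal Python sketch of the idea: sample a loss event frequency and a loss magnitude from Beta-PERT distributions, multiply them, and repeat many times. This is a simplification for illustration, not a full FAIR implementation and not our product code. The function names and the (min, most likely, max) estimates are hypothetical, and the shape parameter of 4 is the conventional PERT default that we are assuming here.

```python
import random
import statistics


def sample_pert(low, mode, high, rng, lam=4.0):
    """Draw one value from a Beta-PERT distribution on [low, high]."""
    span = high - low
    alpha = 1.0 + lam * (mode - low) / span
    beta = 1.0 + lam * (high - mode) / span
    return low + rng.betavariate(alpha, beta) * span


def simulate_annual_loss(lef, lm, trials=10_000, seed=42):
    """Monte Carlo: annualized loss = loss event frequency x loss magnitude."""
    rng = random.Random(seed)
    return [sample_pert(*lef, rng) * sample_pert(*lm, rng) for _ in range(trials)]


# Hypothetical analyst estimates as (min, most likely, max).
lef_estimate = (0.1, 0.5, 2.0)                 # loss events per year
lm_estimate = (50_000, 250_000, 2_000_000)     # dollars per event

losses = sorted(simulate_annual_loss(lef_estimate, lm_estimate))
mean_loss = statistics.fmean(losses)
p90_loss = losses[int(0.9 * len(losses))]

print(f"Mean annualized loss: ${mean_loss:,.0f}")
print(f"90th percentile loss: ${p90_loss:,.0f}")
```

Notice that every number driving the result is one of the six estimates typed in by hand. Change any of them and the output distribution moves with it.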

But does being an open model mean it’s right or good?

Using FAIR requires significant manual inputs, and they heavily influence the output – and therein lies the challenge. The challenge isn’t with the model (i.e., Monte Carlo simulation) – it’s with what you put into FAIR to get defensible outputs.

I had a BISO who had used FAIR internally tell me that she didn’t want to have to answer the question of “where did you get your inputs that drove the output” with “I entered them” as that wasn’t a defensible answer (her paraphrased words). And therein lies a key distinction in the “open” model critique – while the math is “open,” the inputs are not. And the inputs are the hard part.

I say this because, while a lot of companies know FAIR, using it is a challenge. FAIR isn’t the answer to everything – it’s a tool in the risk manager’s tool belt. There are other models that work very well to quantify cyber risk.

Our approach at ThreatConnect is – and will be – to support FAIR. We let our users choose between running FAIR, Semi-Automated FAIR (with pre-populated loss data and automated Vulnerability calculations), and ML-based scenarios depending on their needs and the data available. And most users choose the latter two approaches as they provide more actionable results, faster and more defensibly.

So how do we make sure our models are “open” and good? Our product uses statistical, regression, and machine learning models that are – in and of themselves – “open.” We spend our time working with data to automate the inputs to the models (which, of course, are tunable) to make sure our outputs are good – and that’s where the real challenge lies.

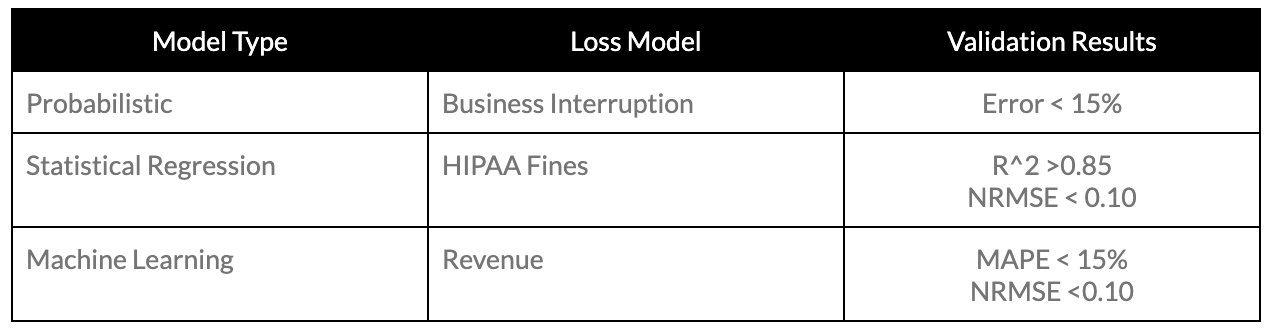

How do we know our models are good? We validate and back-test them. And we’re open about that, as we put the results in our product for users to review. See below for an example from some of our model validation results:

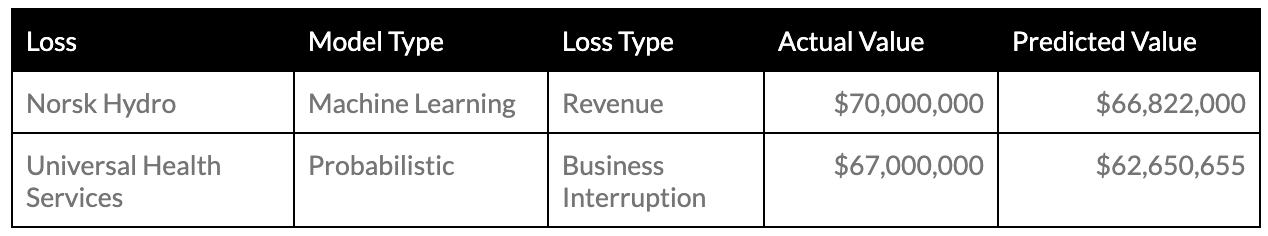

Now those numbers might not mean anything to you – but they do to our data science team. And they’ve back-tested those models with real-world output – see below

The data above – the validation and backtesting information – is how a model should be measured.
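As a rough illustration of what back-testing can look like, the Python sketch below compares a model's predicted loss range against the loss that was actually observed for each historical case. It is not our validation pipeline: the two metrics (interval coverage and median absolute percentage error) are common choices we are assuming for the example, and every figure in `cases` is made up purely to show the mechanics.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class BacktestCase:
    predicted_p05: float   # lower bound of the predicted loss range
    predicted_p50: float   # predicted median loss
    predicted_p95: float   # upper bound of the predicted loss range
    observed_loss: float   # loss actually recorded for the event


def interval_coverage(cases):
    """Fraction of observed losses that fall inside the predicted 5-95% range."""
    hits = sum(c.predicted_p05 <= c.observed_loss <= c.predicted_p95 for c in cases)
    return hits / len(cases)


def median_abs_pct_error(cases):
    """Median of |predicted median - observed| / observed across all cases."""
    return median(abs(c.predicted_p50 - c.observed_loss) / c.observed_loss for c in cases)


# Hypothetical back-test cases (illustrative numbers only, not real results).
cases = [
    BacktestCase(100_000, 400_000, 1_500_000, 350_000),
    BacktestCase(50_000, 200_000, 900_000, 1_200_000),   # observed loss above the range
    BacktestCase(20_000, 80_000, 300_000, 100_000),
    BacktestCase(500_000, 2_000_000, 8_000_000, 1_600_000),
]

coverage = interval_coverage(cases)
error = median_abs_pct_error(cases)

print(f"Interval coverage: {coverage:.0%}")
print(f"Median absolute percentage error: {error:.1%}")
```

A well-calibrated 5-95% range should capture roughly 90% of observed losses over a large enough sample. Coverage far below that suggests the model is overconfident.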

We’ve also innovated on the technical side (the Vulnerability part of the FAIR taxonomy), as CRQ isn’t just about the financial information. Financial losses from cyber attacks are tied to the defenses that the attacker beats. Only by combining attack, defense, and loss data do you get a full picture of cyber risk.

The core “model” for our attack path modeling is “open” (Markov chains with Monte Carlo simulations with some patent-pending calculations, which are shown in the product), but that isn’t the whole story. The whole story is, where do you get the data to feed those models?
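For readers who have not seen the combination before, here is a minimal Python sketch of a Markov chain sampled with Monte Carlo. It covers only the generic, textbook technique and does not include our patent-pending calculations. The stage names and transition probabilities are hypothetical placeholders.

```python
import random

# Hypothetical attack stages and transition probabilities (illustrative only).
TRANSITIONS = {
    "initial_access": [("execution", 0.6), ("blocked", 0.4)],
    "execution": [("lateral_movement", 0.5), ("blocked", 0.5)],
    "lateral_movement": [("exfiltration", 0.4), ("blocked", 0.6)],
}
ABSORBING = {"exfiltration", "blocked"}  # the walk stops in these states


def walk(rng, start="initial_access"):
    """Follow the chain from the start state until an absorbing state is hit."""
    state = start
    while state not in ABSORBING:
        next_states, weights = zip(*TRANSITIONS[state])
        state = rng.choices(next_states, weights=weights)[0]
    return state


def estimate_success(trials=20_000, seed=7):
    """Monte Carlo estimate of the probability that an attack reaches exfiltration."""
    rng = random.Random(seed)
    wins = sum(walk(rng) == "exfiltration" for _ in range(trials))
    return wins / trials


success_rate = estimate_success()
print(f"Estimated attack success rate: {success_rate:.1%}")
```

For this simple linear chain the exact answer is 0.6 × 0.5 × 0.4 = 0.12, so the simulation is easy to sanity check. The simulation becomes worthwhile once paths branch or loop. Either way, the hard part is where the transition probabilities come from.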

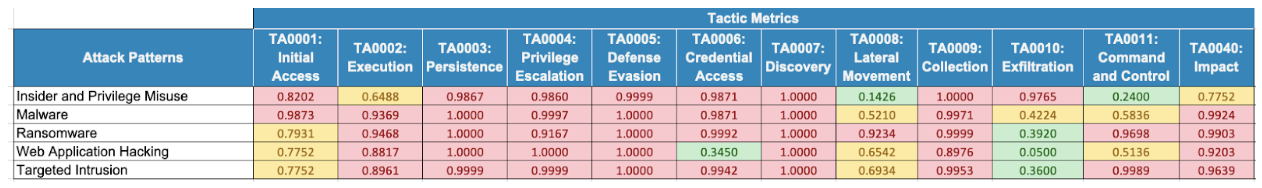

Our data comes from a variety of places, including inside your organization. We gather a picture of your attack surface (using data from CMDBs, vulnerability scanners, and control measurements like NIST CSF, ISO 27001, and CIS, to name a few), and we measure how effective attacks against your defenses can be by categorizing those defenses against the MITRE ATT&CK framework. Each attack that RQ simulates is also categorized against the ATT&CK framework. Because both sides share the same technique categories, the attack and the defense can be reconciled against each other, providing a means to understand the defense in the context of these attacks.
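One way to picture that reconciliation is as a join on ATT&CK technique IDs. The Python sketch below is illustrative rather than a description of how RQ works internally. The technique IDs are real ATT&CK identifiers, but the control names, the effectiveness scores, and the assumption that controls act independently are all hypothetical.

```python
from math import prod

# Attack path expressed as ATT&CK technique IDs:
# T1566 Phishing, T1059 Command and Scripting Interpreter,
# T1021 Remote Services, T1041 Exfiltration Over C2 Channel.
attack_path = ["T1566", "T1059", "T1021", "T1041"]

# Hypothetical controls mapped to the techniques they mitigate,
# each with an assumed effectiveness between 0 and 1.
controls = {
    "email_filtering": {"T1566": 0.7},
    "endpoint_detection": {"T1059": 0.6, "T1021": 0.3},
    "network_segmentation": {"T1021": 0.5},
}


def residual_by_technique(attack_path, controls):
    """Chance each technique still succeeds, assuming controls act independently."""
    residual = {}
    for technique in attack_path:
        scores = [m[technique] for m in controls.values() if technique in m]
        residual[technique] = prod(1.0 - s for s in scores)  # no controls -> 1.0
    return residual


residual = residual_by_technique(attack_path, controls)
uncovered = [t for t, p in residual.items() if p == 1.0]

for technique, chance in residual.items():
    print(f"{technique}: {chance:.0%} chance the technique succeeds")
print("Techniques with no mapped control:", uncovered)
```

Because attacks and defenses share one vocabulary, gaps such as a technique with no mapped control fall out of the data directly.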

And the model we use is open – see below for an example of attack results against a given configuration.

There’s a lot more that can be said about CRQ models, where the space is going, and the kinds of conversations that we’ll have. Personally, I don’t think there will be one model to “rule them all.” Rather, I think customers will apply different models as appropriate. We have customers who use FAIR for some risk scenarios, Semi-Automated FAIR for others, and the ML-based models for others. Why? Because the goal for CRQ is to provide actionable answers to tough questions at speed and at scale.

Our belief is that we should be looking at how good a model is by validating and back-testing the models and doing so in an open, transparent, and data-driven manner. That’s our approach, and you’ll hear more from us as we continue to evolve and improve the CRQ space.

If you’d like to learn more, please reach out to sales@threatconnect.com or /request-a-demo/. We’re happy to help and answer any questions you might have!

Quote Attribution: Gartner, “Drive Business Action with Cyber Risk Quantification,” Cybersecurity Research Team, 21 March 2022. GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and is used herein with permission. All rights reserved.