It’s been almost a year since the National Institute of Standards and Technology (NIST) issued its internal report on “Integrating Cybersecurity and Enterprise Risk Management (ERM).” I thought it was time to take another look at it and share what I think are the most interesting conclusions.

First: CRQ Isn’t Done Well

The following line in the Executive Summary was fascinating:

“However, most enterprises do not communicate their cybersecurity risk guidance or risk responses in consistent, repeatable ways. Methods such as quantifying cybersecurity risk in dollars and aggregating cybersecurity risks are largely ad hoc and are sometimes not performed with the same rigor as methods for quantifying other types of risk within the enterprise.”

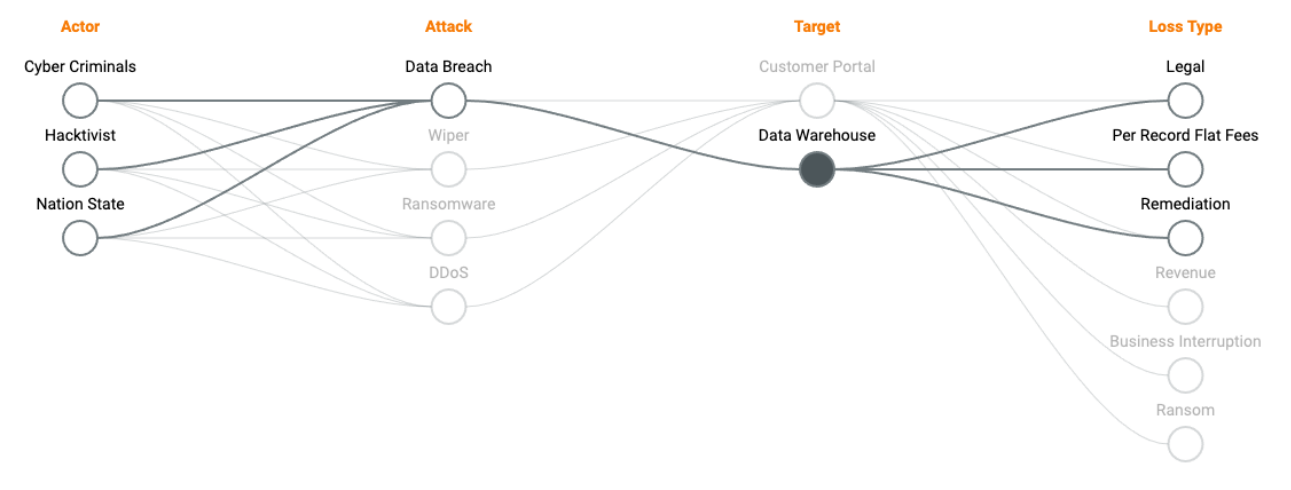

I couldn’t agree more, but I think part of the problem is that people hear the term “cyber risk” and assume there’s one thing called “cyber risk.” In reality, your “cyber risk” depends on your technical risk profile (the actor/attack you’re facing) matched against the defenses you have in place. When an attack succeeds, you take a loss. That relationship (mathematically and logically) is at the core of how we set up RQ to compute financial loss (we call that relationship an impact vector).

That relationship is why we believe you should always report an SLE (representing the total loss), an ALE (representing the annualized loss), and the likelihood of success (the chance the attacker beats your defense) together when reporting cyber risk. Communicating just one (or two) pieces of that puzzle leads to confusion and presents an incorrect view of the risk.
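To make the point concrete, here is a minimal sketch of why the three numbers belong together. It is not RQ's model: it assumes the textbook relationship ALE = SLE x annualized rate of occurrence, with the rate taken as attempts per year times the likelihood of success. The `RiskEstimate` class, the `attempts_per_year` input, and all dollar figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskEstimate:
    sle: float                # total loss from one successful attack, in dollars
    attempts_per_year: float  # assumed attack frequency (hypothetical input)
    p_success: float          # chance an attempt beats the defenses, 0..1

    @property
    def ale(self) -> float:
        # Textbook assumption: ALE = SLE x (attempts per year x likelihood of success)
        return self.sle * self.attempts_per_year * self.p_success


def report(r: RiskEstimate) -> str:
    """Report all three figures together rather than any one alone."""
    return f"SLE ${r.sle:,.0f} | likelihood {r.p_success:.0%} | ALE ${r.ale:,.0f}"


# Two made-up scenarios: a rare, severe loss and a frequent, smaller one.
rare_but_severe = RiskEstimate(sle=2_000_000, attempts_per_year=1, p_success=0.05)
likely_but_small = RiskEstimate(sle=200_000, attempts_per_year=1, p_success=0.50)

print(report(rare_but_severe))
print(report(likely_but_small))
```

Both scenarios land on the same ALE even though the SLE differs by a factor of ten, so a report that shows only the ALE would make them look identical.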

Second: How Do We Do This For Our Company?

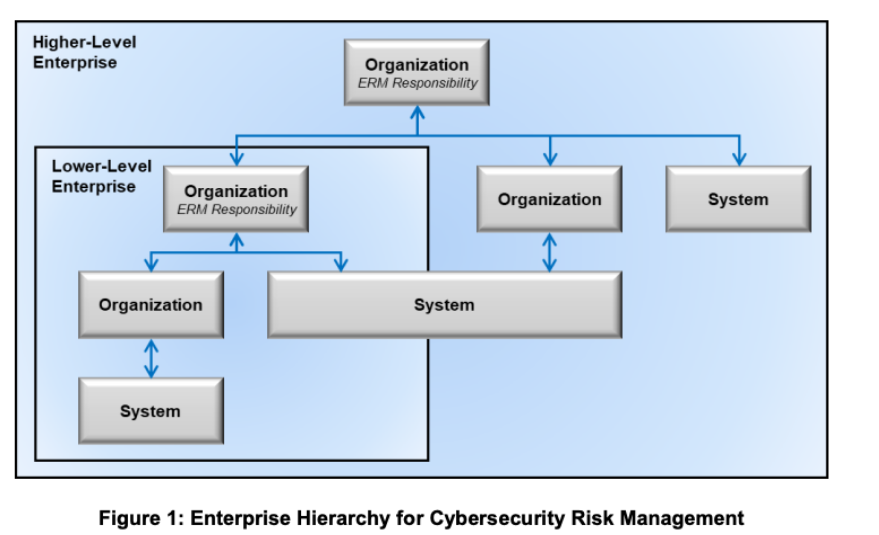

A question we often get is “what would this look like?” or “how would I start?” I love this picture in the NIST guidance, as we created a similar structure in RQ years ago.

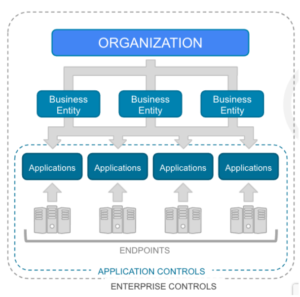

At the end of the day, cyber risk exists at a system level (i.e., a technical system, ITIL Service, or Business Application). Those systems (or applications) provide value to the business (through a Business Entity or Business Process), and they become the link between the financial risk and the technical risk. RQ’s conceptual view of an organization is shown below (note: I found this pic in a 2019 presentation – way before the NIST report was published).

This setup works but (as NIST points out in section 2.2.3) also causes problems, as teams focus on the individual system (or application) level and miss the organization/enterprise level. We’ve seen that too – hence the reason for the reporting structure shown in the figure above. While cyber risk exists at a system level (something technical has to get hacked), it’s reported up through business channels.
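As a rough illustration of reporting system-level risk up through business channels, the sketch below rolls per-system annualized loss up to the business entity each system supports, and then to an enterprise total. The system names, entities, and dollar figures are made up, and plain summation is a simplifying assumption (it ignores correlated losses and systems shared across entities).

```python
from collections import defaultdict

# Hypothetical inventory: (system, business entity it supports, system-level ALE in dollars)
systems = [
    ("payments-api", "Retail Banking", 400_000),
    ("core-ledger", "Retail Banking", 250_000),
    ("hr-portal", "Corporate", 50_000),
]


def roll_up(rows):
    """Sum system-level ALE per business entity, plus an enterprise total."""
    by_entity = defaultdict(float)
    for _system, entity, ale in rows:
        by_entity[entity] += ale
    return dict(by_entity), sum(by_entity.values())


by_entity, enterprise_total = roll_up(systems)

for entity, total in by_entity.items():
    print(f"{entity}: ${total:,.0f}")
print(f"Enterprise: ${enterprise_total:,.0f}")
```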

Third: Risk Registers and Assets

Again NIST points out the obvious – namely that “Improving the risk measurement and analysis methods used in CSRM, along with widely using cybersecurity risk registers, would enhance the quality of the risk information provided to ERM.”

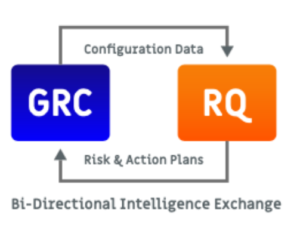

While this is a great statement, it’s not a solution. A solution would be to connect your CRQ tool to your Configuration Management Database (CMDB) and/or GRC tools. Then you could mitigate the challenge of managing assets while also ensuring that data flows bi-directionally from your GRC/CMDB to your CRQ solution (we’re connected to a bunch of those tools).

One Thing I Thought Would Be in The Document

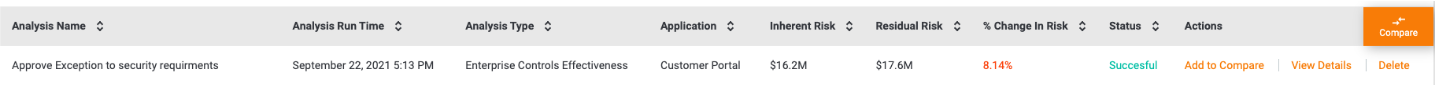

A big use case we see is exception management. What I mean by that is companies have to balance security needs/risks with the needs of the business. If a business owner says “we can’t meet security requirements because of X, Y, Z” typically what happens is they get a waiver (or exception).

That creates a potentially large risk for the company and that needs to be quantified (in financial terms). It’s also why we created the ability to model “what if” scenarios that show the inherent and residual risk of granting those waivers (among other use cases – see below for an example).
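A simple way to picture a waiver “what if” is to compare the annualized loss with the control in place against the annualized loss once the waiver is granted. The sketch below is only an illustration under the textbook assumption ALE = SLE x attempts per year x likelihood of success; the function names and every input value are hypothetical, not how RQ models it.

```python
def ale(sle: float, attempts_per_year: float, p_success: float) -> float:
    """Annualized loss under the textbook assumption described above."""
    return sle * attempts_per_year * p_success


def waiver_what_if(sle, attempts_per_year, p_with_control, p_with_waiver):
    """Compare annualized loss with the control in place vs. with the waiver granted."""
    with_control = ale(sle, attempts_per_year, p_with_control)
    with_waiver = ale(sle, attempts_per_year, p_with_waiver)
    return {
        "with_control": with_control,
        "with_waiver": with_waiver,
        "added_exposure": with_waiver - with_control,
    }


# Made-up inputs: the waiver raises the attacker's chance of success from 10% to 25%.
scenario = waiver_what_if(
    sle=1_000_000, attempts_per_year=2, p_with_control=0.10, p_with_waiver=0.25
)

for name, dollars in scenario.items():
    print(f"{name}: ${dollars:,.0f}")
```

The `added_exposure` figure is the number a business owner would be asked to accept when requesting the exception.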

One Thing I Loved

So this is a personal one for me – the mention of a Business Impact Analysis (BIA). When I first started in cyber risk quantification (7 or 8 years ago) we built our inputs on top of BIA templates. They worked well to start (we’ve since evolved beyond them), but it was cool to see them in there (and mark this document – no one in the history of the world has linked “BIA” and “cool” together in a sentence).

Where it Lost Me

Heat maps. They bring up heat maps. I’m not a fan of heat maps. The reason is simple – they’re very subjective or “pseudo quantitative.” To give you an example, one thing we talk about is prioritizing CVEs by their financial impact rather than their CVSS score. Why? Because if you have two CVEs that are both scored at 9.3, does that mean they’re of equal risk? Are they both “High” or “Red”? Are all “high” and “red” values equal? The answer is of course not – but heat maps can skew the visual in a way that hides the truly impactful risks.
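Here is a small sketch of that difference, using made-up findings rather than real CVE IDs. Two findings share a 9.3 score but sit on systems with very different dollar exposure; the `ale` values are hypothetical inputs that a quantification step would have to supply.

```python
# Hypothetical findings: identical severity scores, very different dollar exposure.
findings = [
    {"id": "finding-A", "score": 9.3, "system": "test-server", "ale": 15_000},
    {"id": "finding-B", "score": 9.3, "system": "payments-api", "ale": 900_000},
    {"id": "finding-C", "score": 7.5, "system": "core-ledger", "ale": 400_000},
]

# Ranking by severity score alone cannot separate A from B.
by_score = sorted(findings, key=lambda f: f["score"], reverse=True)

# Ranking by estimated annualized loss puts the costly findings first.
by_dollars = sorted(findings, key=lambda f: f["ale"], reverse=True)

print("By score:  ", [f["id"] for f in by_score])
print("By dollars:", [f["id"] for f in by_dollars])
```

On a heat map, finding-A and finding-B would land in the same red cell. Ranked by dollars, finding-C (scored lower) moves ahead of finding-A.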

In the end, the NIST document is a good high-level overview of what you should consider doing. My recommendation would be to start doing the things it recommends – as soon as you can. There’s no perfect time to start, there’s no perfect amount of data to start with, and you’ll never be “ready.” It’s time to move the conversation forward from “what we should do” to “how we’re doing it.”