
I’ve always said that IOCs are like pennies in the currency of intelligence: annoying to deal with, but en masse they can add up to something big. Sometimes you won’t know what you have until you start flipping over the proverbial couch cushions of your organization’s log files, scan results, and so on.

If IOCs are like pennies, then file hashes are like those odd foreign coins you find that might be worth something totally different based on exchange rates or collector interest. They’re fickle things that sort of defy the laws of how we treat indicators. An adversary can trivially change them, but when you see one that’s known to be bad, you can be very confident that it is what you think it is. How often do we have such a blessing in our world?

Since adding support for multiple hash types to the ThreatConnect platform years ago, we’ve noticed an industry-wide problem when it comes to dealing with file hashes: nobody is using the same currency. Specifically, a lot of us are forced into using different currencies for different parts of our jobs. For example, an intelligence feed may give you an update of known binary samples as SHA256 hashes. However, your EDR product might only accept MD5s. Heaven save you if your industry partners are communicating using SHA1s.

Somewhere in the middle, someone is responsible for trying to maintain the exchanges between these currencies. How do you know that this MD5 hash represents the same file as that SHA1? You may be lucky enough to have a data source somewhere in your tech stack that deals in all three hash types. But are there brains in the middle to track that? What happens when two sources disagree with each other? What happens when you learn of a new hash that belongs with its buddies, or that your previous mappings were wrong?

Making Heads or Tails of Files

We sought to address these problems with CAL’s latest release. By scrutinizing our existing data sources of hundreds of millions of file hashes, and adding in some new ones, we’ve been able to start untangling the webs our data sources are weaving.

Our users saw a problem as they went about their day-to-day jobs as intel producers and incident responders, and we noticed it too. With everyone exchanging currencies at different rates in different ways, things were getting lost in translation. Context and critical enrichments were diverging. Pertinent intelligence was available to those who needed it, but only if they knew to ask about a file by the correct hash. These minor errors seem trivial at first, but thanks to CAL’s massive aggregate dataset and analytics horsepower, we were able to show that these “fractions of a penny” errors could add up very quickly.

The look you get when you ask your coworker “what kind of hash do you need?”

These problems were starting to stack up in significant ways for our fellow security nerds. Our users voiced some of the frustrations they face in day-to-day work with files:

- Dealing with alert fatigue and false positives.

- Figuring out which hashes belong together.

- Building an overall enrichment of file IOCs without relying on a toolbelt of third-party offerings.

Thanks to our new file analytics in CAL 2.9, we’ve been able to unify and “flatten” these disparate datasets to generate a more holistic and accurate picture. On the scale of over a billion indicators, we’ve figured out which hashes belong together, which sources give refuting data, and which ones to trust. We’ve solved the “fractions of a penny” error for you. Even better, these insights and decisions have been simplified and made accessible via the ThreatConnect platform and Playbooks, so you can start benefiting from them right away.

“Well those are whole pennies, right? I’m just talking about fractions of a penny here. But we do it from a much bigger tray and we do it a couple million times.”

-Peter Gibbons, Office Space

Reducing False Positives and Alert Fatigue

This is always one of the biggest problems to address for our users. We use phrases like “boil the ocean” or “garbage in, garbage out” but the reality is that someone somewhere in the loop needs to derive insights from the big picture.

We have a number of analytics that are geared towards identifying things that are patently boring. Why? Because we can leverage features like indicator status to make sure that these indicators aren’t clogging up your SIEM, sensors, and more while still allowing you to memorialize them as needed.

One such example is that CAL ingests the National Software Reference Library (NSRL) published by the National Institute of Standards and Technology (NIST). Simply put, the NSRL primarily contains hashes of “known” software and pertinent files. Think of every “known” binary like Notepad or Registry Editor that ships with Windows, every .DLL file from every version of it, and so on.

The Problem — As you can imagine, the NSRL is massive, tallying over 235 million hashes. This is too much for you to maintain as you’re doing your job: it’s a big data problem, so we have a big data solution (CAL) to take care of that for you. Unfortunately, the NSRL only publishes MD5 and SHA1 hashes. Do you need to use a SHA256 to initiate a sweep across your company’s thousands of endpoints? You’re likely out of luck.

This file (cmd.exe) was listed in NSRL via MD5 and SHA1 hashes, but not SHA256. Let CAL fill in those blanks for you so that your day isn’t filled with false positive alerts on that SHA256.

The Solution — CAL’s analytics have identified where we’ve seen hashes listed together and which sources we can trust with that currency exchange. Those 235 million hashes are really two sets of hashes talking about 117 million files with no “known” SHA256 hash. Of those files, our analytics immediately identified 65 million SHA256 hashes.

Why does this matter? If you’re a shop that needs SHA256s at all, you just got false positive protection for more than half of the NSRL dataset (roughly 65 million of 117 million files) without having to lift a finger.
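To make the idea concrete, here is a minimal Python sketch of filling in missing SHA256 hashes for an MD5/SHA1-only list and using the result to quiet alerts. It is not a description of how CAL works internally: the function names, the `known_triplets` mapping, and the stand-in sample bytes are all hypothetical and exist only for illustration.

```python
import hashlib

def sha256_allowlist(nsrl_pairs, known_triplets):
    """Return the SHA256s we can attach to (md5, sha1) pairs from an NSRL-style list.

    known_triplets is a hypothetical mapping of (md5, sha1) -> sha256 built
    from trusted sources; pairs without a known SHA256 are simply skipped.
    """
    return {known_triplets[pair] for pair in nsrl_pairs if pair in known_triplets}

def suppress_known(alert_hashes, allowlist):
    """Keep only the alert hashes that are not on the known-software allowlist."""
    return [h for h in alert_hashes if h not in allowlist]

# Stand-in bytes for a benign file and a suspicious file (not real samples).
benign = b"pretend this is a well-known system binary"
suspicious = b"pretend this is something unknown"

benign_pair = (hashlib.md5(benign).hexdigest(), hashlib.sha1(benign).hexdigest())
benign_sha256 = hashlib.sha256(benign).hexdigest()
suspicious_sha256 = hashlib.sha256(suspicious).hexdigest()

# The list only knows the MD5/SHA1 pair; the mapping supplies the SHA256.
allowlist = sha256_allowlist([benign_pair], {benign_pair: benign_sha256})
remaining = suppress_known([benign_sha256, suspicious_sha256], allowlist)
```

In this toy run, only the suspicious SHA256 is left in `remaining`, because the benign file's SHA256 was recovered from its MD5/SHA1 pair.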

Dealing with Incomplete and Incorrect Hash Mappings

I think we have two types of users: those who have this problem and complain about it every time we talk, and those who have this problem and don’t realize it.

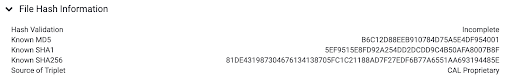

The Problem — We can tell you first-hand that there’s a lot of incomplete and incorrect information regarding which hash goes with which. Putting aside for a moment the demonstrably-possible-but-statistically-insignificant occurrence of MD5 collisions, we were able to scour our datasets to understand which sources provide what flavors of data. We identified three “states” of data:

- Complete triplets are defined as a series of hashes (MD5, SHA1, and SHA256) that correctly belong together. Certain ground-truth sources, such as running the hashing algorithms on the file samples themselves, yield the utmost confidence in this. However, we often don’t have the file samples themselves. So when we’re faced with another data source, such as an open source feed that just spews a list of hashes, we can compute a gradient of how trustworthy those different sources are.

- Incomplete triplets occur when we get one or two of the hash types mentioned above. Some data sources simply publish the MD5 and SHA1, as an example. That’s still valuable intelligence, but it doesn’t necessarily help us when you need that file’s SHA256 to conduct an enterprise-wide search and destroy mission.

- Invalid triplets occur when we get refuting information from a less-trusted source. If, for example, we hashed a file sample ourselves and then a feed reported a different SHA256 for the same SHA1, we consider that to be an invalid triplet as it refutes our more highly-trusted source.
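The three states above can be sketched in a few lines of Python. This is an illustrative simplification, not CAL's actual logic: the `Triplet` type and the `hash_sample` and `classify` helpers are hypothetical names, trust is reduced to a single "more trusted" triplet, and the caller is assumed to have already matched the two triplets to the same file with lowercase hex digests.

```python
import hashlib
from typing import NamedTuple, Optional

class Triplet(NamedTuple):
    md5: Optional[str] = None
    sha1: Optional[str] = None
    sha256: Optional[str] = None

def hash_sample(data: bytes) -> Triplet:
    """Ground truth: hash the sample bytes with all three algorithms."""
    return Triplet(
        md5=hashlib.md5(data).hexdigest(),
        sha1=hashlib.sha1(data).hexdigest(),
        sha256=hashlib.sha256(data).hexdigest(),
    )

def classify(reported: Triplet, trusted: Triplet) -> str:
    """Compare a reported triplet against a more trusted one for the same file."""
    # Any hash that disagrees with the trusted source refutes it.
    for r, t in zip(reported, trusted):
        if r is not None and t is not None and r != t:
            return "invalid"
    # No disagreement, but at least one hash type is missing.
    if any(r is None for r in reported):
        return "incomplete"
    return "complete"

# Hash a stand-in sample ourselves, then judge what two feeds reported.
truth = hash_sample(b"stand-in bytes for a file sample")
partial_feed = Triplet(md5=truth.md5, sha1=truth.sha1)
bad_feed = Triplet(sha1=truth.sha1, sha256="0" * 64)
```

Here `classify(partial_feed, truth)` yields `"incomplete"` and `classify(bad_feed, truth)` yields `"invalid"`, mirroring the example of a feed reporting a different SHA256 for the same SHA1.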

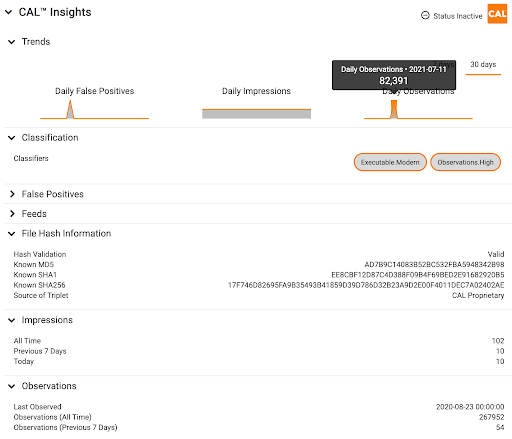

The Solution — When you look at a File indicator in ThreatConnect, you’ll start to notice a new section entitled File Hash Information that looks like this:

This section will outline what our analytics have derived about a File sample’s various hashes:

- Hash Validation speaks to the state of your submitted file hash indicator. For example, if you submitted just one of the three hash types mentioned above, CAL would let you know that it is Incomplete. We know the other hashes that belong with it, and would like you to have that information! Note that if you give us multiple hashes together (e.g., MD5 : SHA1 : SHA256), we’ll choose the most precise one (SHA256) and give you the information we have for that one.

- Known MD5, Known SHA1, and Known SHA256 are there to give you the appropriate hashes (if we have them) based on the “chosen” hash in your submitted indicator.

- Source of Triplet lets you know where we got the information that led us to believe that those are the partner hashes for your submitted indicator. This can be from threat feeds, open sources like the NSRL, or a derivation of multiple sources and the numbers we’ve crunched around them, such as in this case (CAL Proprietary).
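The "choose the most precise hash" rule described above can be sketched as follows. This is an assumption-laden illustration rather than the platform's implementation: `choose_hash` is a hypothetical helper, the hash type is inferred purely from hex digest length (32, 40, or 64 characters), and the indicator is assumed to use `:` as the separator as in the example format above.

```python
import re
from typing import Optional, Tuple

# Hex digest lengths for each algorithm, listed from most to least precise.
HASH_LENGTHS = (("SHA256", 64), ("SHA1", 40), ("MD5", 32))

def choose_hash(indicator: str) -> Optional[Tuple[str, str]]:
    """Pick the most precise hash from an indicator like 'MD5 : SHA1 : SHA256'."""
    parts = [p.strip().lower() for p in indicator.split(":")]
    # Ignore anything that is not a plain hex string.
    hex_parts = [p for p in parts if re.fullmatch(r"[0-9a-f]+", p)]
    for name, length in HASH_LENGTHS:
        for part in hex_parts:
            if len(part) == length:
                return name, part
    return None

# Placeholder digests (repeated characters, not real file hashes).
md5, sha1, sha256 = "a" * 32, "b" * 40, "c" * 64
chosen = choose_hash(f"{md5} : {sha1} : {sha256}")
```

With all three placeholders supplied, `chosen` is the SHA256 entry; with only the MD5 and SHA1, the same function falls back to the SHA1.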

Why does this matter — Being able to tell you the complete and correct mapping of additional hash types removes the currency exchange problem entirely. Your analysts, your playbooks, and your entire enterprise can now benefit from CAL’s meticulous work in building and maintaining that library for you.

File Enrichments and Reputation

Armed with these updates, we can start to provide you with the totality of what we know about an indicator and any of its aliases. Who cares if you call it by its SHA1 name or SHA256, the file is the file. If you can match those names together appropriately, it stands to reason that you can (and should) match any of their corresponding intel and enrichments.

The Problem — Part of the problem with fragmented datasets is that you don’t necessarily know what you have. Your industry partners may have given you some malware designations for the MD5’s they traffic in. Your open source feeds may tell you something else about that same file by its SHA1 name. So how are you to simply ask, on the scale of millions of indicators, the obvious question: “what are all the things I know about this and any of its nicknames?”
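One way to picture answering that question is an alias index: every hash points at its full triplet, and a lookup unions whatever is known under any of those names. The sketch below is hypothetical throughout (function names, data shapes, and the placeholder hash strings and labels are invented for illustration) and says nothing about how CAL stores its data.

```python
from typing import Dict, Iterable, Set, Tuple

def build_alias_index(triplets: Iterable[Tuple[str, ...]]) -> Dict[str, Tuple[str, ...]]:
    """Map every hash to the full tuple of hashes that name the same file."""
    index: Dict[str, Tuple[str, ...]] = {}
    for triplet in triplets:
        for h in triplet:
            index[h] = triplet
    return index

def everything_known(
    query_hash: str,
    alias_index: Dict[str, Tuple[str, ...]],
    enrichments: Dict[str, Set[str]],
) -> Set[str]:
    """Union the enrichments recorded under the query hash and all of its aliases."""
    aliases = alias_index.get(query_hash, (query_hash,))
    known: Set[str] = set()
    for alias in aliases:
        known |= enrichments.get(alias, set())
    return known

# Placeholder names standing in for one file's MD5, SHA1, and SHA256.
index = build_alias_index([("md5-of-file-a", "sha1-of-file-a", "sha256-of-file-a")])

# Different sources filed their notes under different hash types.
enrichments = {
    "md5-of-file-a": {"partner: malware designation"},
    "sha1-of-file-a": {"open source feed: seen in campaign"},
}

combined = everything_known("sha256-of-file-a", index, enrichments)
```

Querying by the SHA256 name returns both notes, even though neither source ever mentioned that hash.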

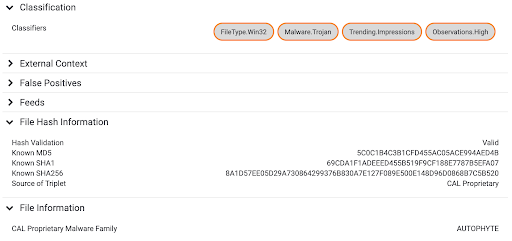

The Solution — When you look at File indicators in ThreatConnect, you’ll start to see more enrichments based on the chosen hash from your query.

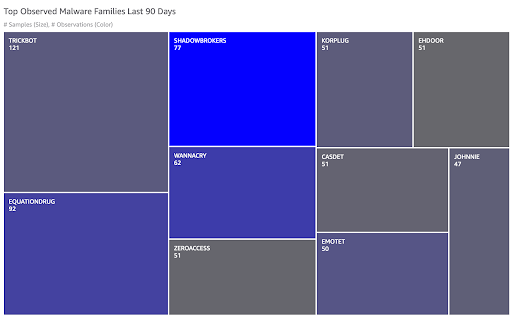

Note the addition of new Classifiers — at a glance you or your Playbooks can determine that the file you’re looking at is a Windows 32-bit binary, and that it’s specifically a Trojan subset of the malware designator. You’ll also start to see the outputs of our new analytics with fields like the CAL Proprietary Malware Family field shown above. As always, we’ll iterate and expand upon these insights and dashboards as we add more data and more horsepower to our engine. As an example, this unified view allows us to see things like the most prolific malware families across our customer instances within the last 90 days:

Note that a moderate number of SHADOWBROKERS samples are responsible for a disproportionate number of observations!

Why does this matter — With all of CAL’s analytical horsepower aligned, we’ve massively stepped up our offering on files. Without knowing anything about a file except for its hash, CAL can immediately deliver improved fidelity for metadata, reputation, and classification.

Stay tuned as we bring you more fresh cuts like these to help you focus your security operations. We’d love to hear more about what sorts of insights you’d like to see and how you’d like to use them!