
Whether you have been a FAIR practitioner for years, like me, or are just starting out, you may have noticed (or will notice) that FAIR is great for understanding your financial risk exposure but has its challenges. I’m not here to list them all, and the good does FAR outweigh the bad. No method for quantifying risk is perfect, and each has its own challenges. In this blog, I will highlight two of the largest challenges and how to address them.

Controls

FAIR highlights four main categories of controls: Avoidance, Deterrence, Resistive, and Responsive. The challenge is knowing where to apply them. Sure, training may provide a general mapping (Avoidance = Contact Frequency, Deterrence = Probability of Action, Resistive = Resistance Strength, Responsive = Loss Magnitude), but where a control belongs also depends heavily on how the scenario is scoped and how the control is implemented. This makes determining the Threat Event Frequency (TEF) and Vulnerability (VULN) difficult.
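As a rough illustration of that general mapping, here is a minimal Python sketch. It assumes the standard FAIR relationships (TEF = Contact Frequency × Probability of Action, and Loss Event Frequency = TEF × Vulnerability). The function names and every number are hypothetical and only show where each control category would nudge the math.

```python
# Training-level mapping of FAIR control categories to the factor each one
# usually influences. Real scenarios may place a control elsewhere.
CONTROL_CATEGORY_TO_FACTOR = {
    "Avoidance": "Contact Frequency",
    "Deterrence": "Probability of Action",
    "Resistive": "Resistance Strength",
    "Responsive": "Loss Magnitude",
}


def threat_event_frequency(contact_frequency, probability_of_action):
    """TEF: how often a threat actor makes contact AND chooses to act."""
    return contact_frequency * probability_of_action


def loss_event_frequency(tef, vulnerability):
    """LEF: how often a threat event actually becomes a loss event."""
    return tef * vulnerability


# Hypothetical point estimates for a single scenario.
contact_frequency = 20.0      # contacts per year (Avoidance lowers this)
probability_of_action = 0.25  # chance a contact becomes an attempt (Deterrence lowers this)
vulnerability = 0.5           # chance an attempt succeeds (Resistive controls lower this)

tef = threat_event_frequency(contact_frequency, probability_of_action)
lef = loss_event_frequency(tef, vulnerability)
print(f"TEF: {tef} events/year, LEF: {lef} loss events/year")
```

In practice these inputs would be ranges or distributions rather than single values, which is part of why deciding where a control lands is hard.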

Take data loss prevention (DLP), for example. You might assume that a DLP control only affects Secondary Loss Event Frequency (SLEF), but what happens if that control is implemented with Block All? It could then affect the VULN calculation, since it may prevent an actor from succeeding at all. On top of that, how do you account for all of the controls across your environment, given that many of them come into play for the scenarios you are measuring? There are a lot of assumptions that still need to be made.

Don’t get me wrong, assumptions are not a bad thing, but they don’t make the application of controls any easier. The new FAIR-CAM (FAIR Controls Analytics Model) might help with that, but it looks complicated. I have only seen demonstrations of how it would work, and they still leave me with a lot of questions.

The ideal state would be a solution that would automatically apply my controls for me.

Recommendations

Once you have identified and measured the scenarios of concern, how do you know what to do about them? Sure, you can run “What-if” scenarios to see how improving a particular control would reduce your risk, but how do you apply that holistically across your organization? This is cumbersome because it usually takes interviews with multiple Subject Matter Experts (or maybe only one, if you get lucky and identify the right person) to determine the true effect of a control on your environment. After those conversations, you are still left with an assumption based on that SME’s experience. I have used this approach dozens of times over the years, and it can work, but is it really defensible? If only there were a way to automatically recommend improvements and remove some of the subjectivity from the equation.
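To make the manual “What-if” approach concrete, here is a minimal Monte Carlo sketch in Python. It is not how any particular tool works. It assumes a loss event occurs whenever a sampled threat capability exceeds a sampled resistance strength, and every range in it (threat events per year, capability, resistance, loss per event) is a hypothetical stand-in for the estimates you would normally gather from SMEs.

```python
import random


def simulate_annual_loss(rs_low, rs_mode, rs_high, years=5000, seed=42):
    """Estimate mean annual loss for one scenario (all inputs hypothetical)."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    total_loss = 0.0
    for _ in range(years):
        # Threat events this year: rounded draw from a triangular estimate.
        # Note the argument order of random.triangular: (low, high, mode).
        threat_events = round(rng.triangular(2, 20, 8))
        for _ in range(threat_events):
            threat_capability = rng.triangular(0.2, 0.9, 0.6)
            resistance = rng.triangular(rs_low, rs_high, rs_mode)
            # The attempt succeeds only if capability beats resistance.
            if threat_capability > resistance:
                total_loss += rng.triangular(10_000, 500_000, 50_000)
    return total_loss / years


# Baseline control strength vs. a "what-if" with a stronger resistive control.
baseline = simulate_annual_loss(rs_low=0.3, rs_mode=0.5, rs_high=0.7)
improved = simulate_annual_loss(rs_low=0.6, rs_mode=0.8, rs_high=0.95)

print(f"Baseline mean annual loss: ${baseline:,.0f}")
print(f"What-if mean annual loss:  ${improved:,.0f}")
print(f"Estimated reduction:       ${baseline - improved:,.0f}")
```

The output is only as good as the resistance ranges fed into it, and those ranges are exactly the SME-derived assumptions described above.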

A Different Approach

I’m pleased to be working at ThreatConnect, a company that has taken a different approach to risk quantification, one that addresses these challenges and more. ThreatConnect Risk Quantifier can automatically apply controls holistically across your organization. ThreatConnect RQ’s Semi-Automated FAIR analyzes your attack surface all the way down to the MITRE ATT&CK level so you can truly see the impact of your controls on your scenarios.

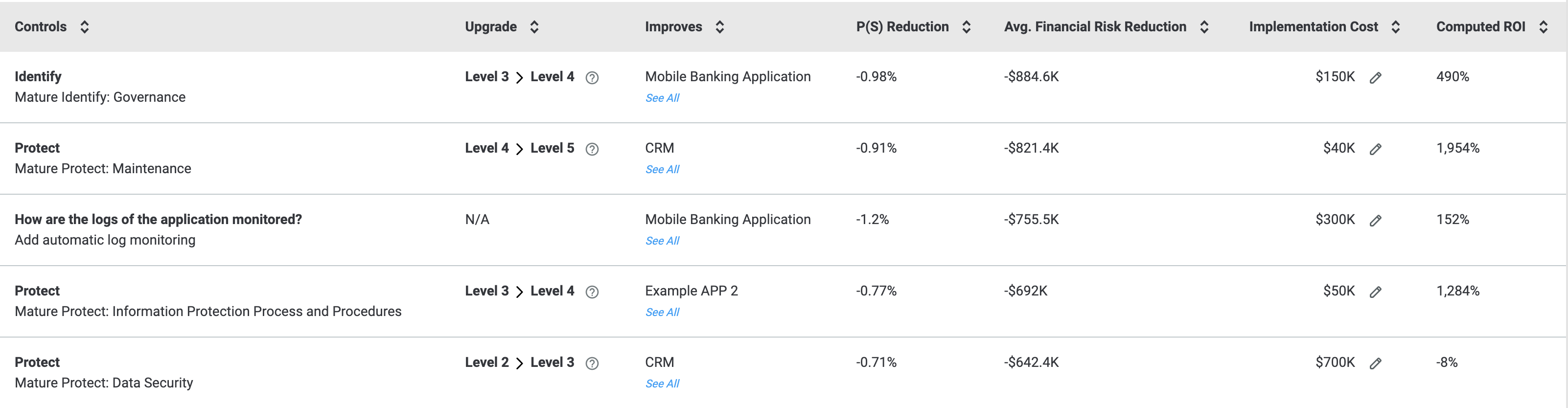

It also automatically recommends which controls should be improved to help mitigate the risk exposure. This lets you spend your time having better conversations instead of adjusting analyses.

Sound like something you’d want to try out and see in action? Check out our Free 14-day trial of the ThreatConnect Risk Quantifier for FAIR!