
Planning for APIs as part of your security architecture

This is my first Playbook Friday blog post. I love the ones the team creates and thought I would try my hand at one. That said, because I am not in the Platform as much as I’d like to be, I enlisted the help of one of our Sales Engineers. (Thanks for your help, Ryan!)

In my last blog post, I discussed the challenge of APIs not existing when we were getting started and how we had to wait out the market. APIs are now available across the security industry, which is very good news. The problem now is that the number of APIs used by security professionals, and the overhead of managing them, is creating a new challenge within the security team.

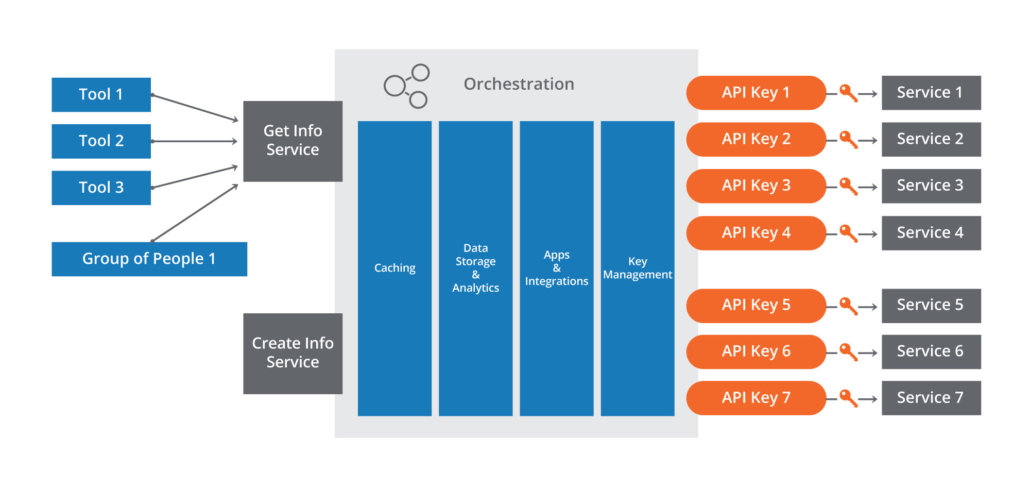

Many organizations have multiple tools and analysts using the same product’s APIs. In some cases, each user or connected tool has its own subscription and API account; in others, they all share an enterprise subscription. Either way, every person and every connected tool may make use of the same API. For example, the SIEM enriches some data through a connection to a malware intelligence service (e.g., Hybrid Analysis, ReversingLabs, VirusTotal) and then sends an alert to the IR platform, which performs the same lookups in the malware service and then calls out to a Threat Intel Analytics and Orchestration platform that asks the exact same question. So, in the best-case scenario, you make three calls to the malware service from three tools for every interesting event in the SIEM. This is inefficient, wastes time, and introduces more potential points of failure.

Keep in mind that this is not the only use case leveraging the API. Generally, there will also be people running custom scripts, other tools beyond the ones above, and people who have been given the API keys without your knowledge, all using the API. In other words, a single API may be called hundreds or thousands of times a day, depending on the use cases and the number of people and tools that have the key.

API Management

This is why API management became important in IT years ago, with the success of Service-Oriented Architecture (SOA). For those of you who have never heard of SOA or want to learn more, please take a look at http://serviceorientation.com/ from Arcitura Education, which provides a great deal of information on the topic.

To counter the problem described above and apply the principles of SOA and API management, the security organization needs to plan for APIs as part of its architecture. Following SOA principles, the service should be built to answer a specific question, say, “Tell me what I know about a file,” and be managed as a resource that is independent of the underlying technologies and services behind it.
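One way to picture a service “managed as a resource that is independent of the underlying technologies” is a single interface that consumers call, with the actual intelligence sources plugged in behind it. The Python sketch below is a hypothetical illustration of that idea, not ThreatConnect’s implementation; `FileIntelService` and the two provider functions are invented for the example.

```python
from typing import Callable, Dict

# A provider is any function that takes a file hash and returns what it knows.
Provider = Callable[[str], Dict[str, object]]


class FileIntelService:
    """One internal service answering "Tell me what I know about a file".

    Consumers depend only on this interface; the providers behind it
    (internal intel, external malware services) can be swapped without
    touching any consumer.
    """

    def __init__(self, providers: Dict[str, Provider]) -> None:
        self._providers = dict(providers)

    def what_do_i_know(self, file_hash: str) -> Dict[str, object]:
        # Fan out to every configured provider and return one combined answer.
        return {name: lookup(file_hash) for name, lookup in self._providers.items()}


# Hypothetical providers, used only for illustration.
def internal_intel(file_hash: str) -> Dict[str, object]:
    return {"observations": 2}


def external_malware_service(file_hash: str) -> Dict[str, object]:
    return {"verdict": "malicious"}


service = FileIntelService({"internal": internal_intel, "external": external_malware_service})
answer = service.what_do_i_know("d41d8cd98f00b204e9800998ecf8427e")
```

Replacing `external_malware_service` with a different vendor changes one line of configuration, while the SIEM, IR platform, and analysts’ scripts keep calling `what_do_i_know` unchanged.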

Benefits of API Management within the security organization:

- Reduce the risk of failure by reducing complexity across your API-dependent systems

- Save the resources spent building or configuring each of those API connections separately in every tool and process

- Conserve metered API calls to external services through caching, and reduce bandwidth and external network traffic in the process

- Decouple internal users and systems from shared API keys, and identify cases where you have unknowingly bought the same service more than once so you can eliminate redundant subscriptions

- Perform analytics across APIs to measure their effectiveness and to surface interesting use cases across your enterprise

Now let’s get a little more detailed about how we are going to fulfill the needs of the service. I’m going to assume that the customer is using ThreatConnect and has the Playbooks capability, which allows them to create ThreatConnect Playbooks and Playbook Components. Although I am using ThreatConnect for all aspects of this use case, there may be reasons to invest in a dedicated API management technology. Offerings from CA Technologies (which acquired Layer 7 Technologies) and Apigee are well known in this area. Most large cloud providers, including Amazon, Microsoft, IBM, and Oracle, also offer some type of API management capability.

As an example, we will build an enterprise Malware Analysis Service and integrate its API with the underlying knowledge in ThreatConnect and CAL™, as well as with a third-party malware service. We will then add metrics collection across various aspects of the service, add a caching capability, and finally configure a dashboard that highlights how the service is being used.

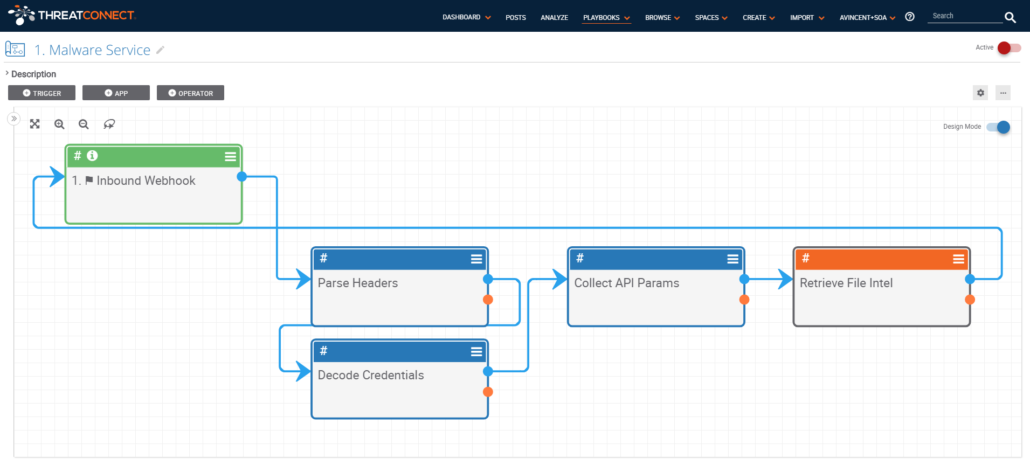

Step 1. Create the “Malware Analysis Service” as a Playbook that receives an API query, authenticated with HTTP Basic Authentication, with a File Indicator as input. The HTTP Basic Authentication username will be used to tie requests to people and tools across the organization.

Basic Flow:

- HTTP Query https://[Your ThreatConnect Hostname]/api/playbook/b2959a04-8de5-4f2f-94b0-c8890e98435

- Pull Username (HTTP Basic) and File Indicator from query and send to Retrieve File Intel Playbook Component

- Send JSON response from Retrieve File Intel to Inbound Webhook as HTTP Response
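Outside of any particular platform, “pull the username and file indicator from the query” amounts to something like the minimal Python sketch below (standard library only). The query parameter name `file` and the example host are assumptions for illustration; the actual Playbook may name its inputs differently.

```python
import base64
from typing import Dict, Optional, Tuple
from urllib.parse import parse_qs, urlsplit


def parse_basic_auth(header: str) -> Optional[str]:
    """Return the username from an HTTP Basic Authorization header, or None."""
    scheme, _, encoded = header.partition(" ")
    if scheme.lower() != "basic" or not encoded:
        return None
    try:
        decoded = base64.b64decode(encoded, validate=True).decode("utf-8")
    except ValueError:
        # binascii.Error and UnicodeDecodeError are both ValueError subclasses.
        return None
    username, sep, _password = decoded.partition(":")
    return username if sep else None


def parse_request(url: str, headers: Dict[str, str]) -> Tuple[Optional[str], Optional[str]]:
    """Extract (username, file indicator) from an inbound request.

    Assumes the indicator arrives as a hypothetical "file" query parameter
    and that the header key is spelled exactly "Authorization".
    """
    query = parse_qs(urlsplit(url).query)
    indicator = query.get("file", [None])[0]
    return parse_basic_auth(headers.get("Authorization", "")), indicator


# Example: an analyst's script calling the service (host is a placeholder).
token = base64.b64encode(b"analyst1:secret").decode("ascii")
user, indicator = parse_request(
    "https://tc.example.com/api/playbook/endpoint?file=d41d8cd98f00b204e9800998ecf8427e",
    {"Authorization": "Basic " + token},
)
print(user, indicator)  # analyst1 d41d8cd98f00b204e9800998ecf8427e
```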

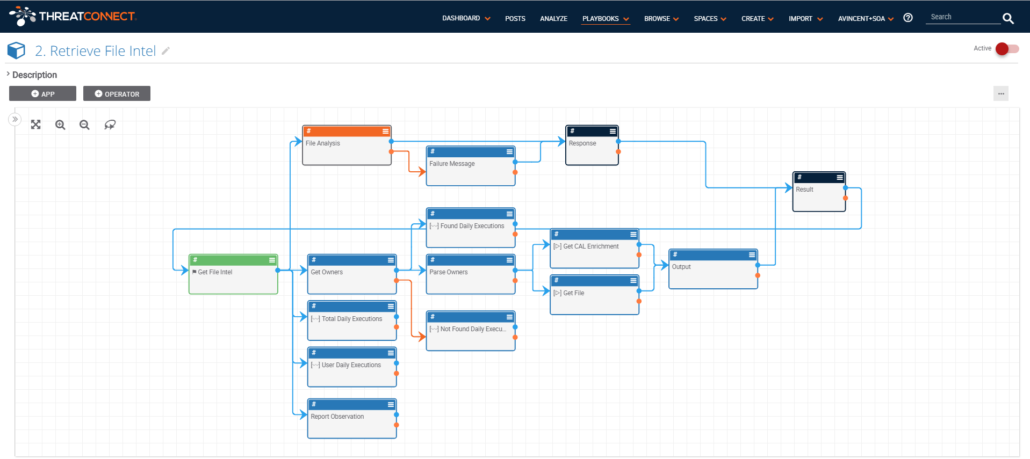

Step 2. Create a Playbook Component, “Retrieve File Intel,” that collects knowledge across the ThreatConnect Platform and calls another Playbook Component that queries external services. The combined data set is returned as JSON to the Malware Analysis Service Playbook.

Basic Flow:

- Receive username and file indicator as input

- Increment File Observation

- Run File Analysis Playbook Component

- Get all Intel from within ThreatConnect and CAL about file indicator

- Merge File Analysis response and all other intel collected and return JSON result to Malware Analysis Service Playbook

Metrics:

- Daily Executions – Total and By User

- Daily Executions – File Found

- Daily Executions – File Not Found
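The Retrieve File Intel flow and its metrics can be sketched in Python as follows. This is a hypothetical model, not Playbook code: the ThreatConnect/CAL lookup and the File Analysis component are passed in as plain functions, and treating “File Found” as “the internal lookup returned something” is an assumption.

```python
from collections import Counter
from datetime import date
from typing import Callable, Dict, Optional

Intel = Dict[str, object]


class FileIntelMetrics:
    """Daily counters matching the metrics listed for Retrieve File Intel."""

    def __init__(self) -> None:
        self.total: Counter = Counter()      # key: day
        self.by_user: Counter = Counter()    # key: (day, username)
        self.found: Counter = Counter()      # key: day
        self.not_found: Counter = Counter()  # key: day


def retrieve_file_intel(
    username: str,
    file_hash: str,
    observations: Counter,
    run_file_analysis: Callable[[str], Intel],
    get_internal_intel: Callable[[str], Optional[Intel]],
    metrics: FileIntelMetrics,
    today: Optional[date] = None,
) -> Dict[str, object]:
    day = today or date.today()
    metrics.total[day] += 1
    metrics.by_user[(day, username)] += 1

    observations[file_hash] += 1             # "Increment File Observation"
    analysis = run_file_analysis(file_hash)  # File Analysis component (stubbed)
    intel = get_internal_intel(file_hash)    # ThreatConnect + CAL lookup (stubbed)

    if intel:
        metrics.found[day] += 1
    else:
        metrics.not_found[day] += 1

    # Merge both answers into one JSON-serializable result for the caller.
    return {"indicator": file_hash, "analysis": analysis, "intel": intel or {}}
```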

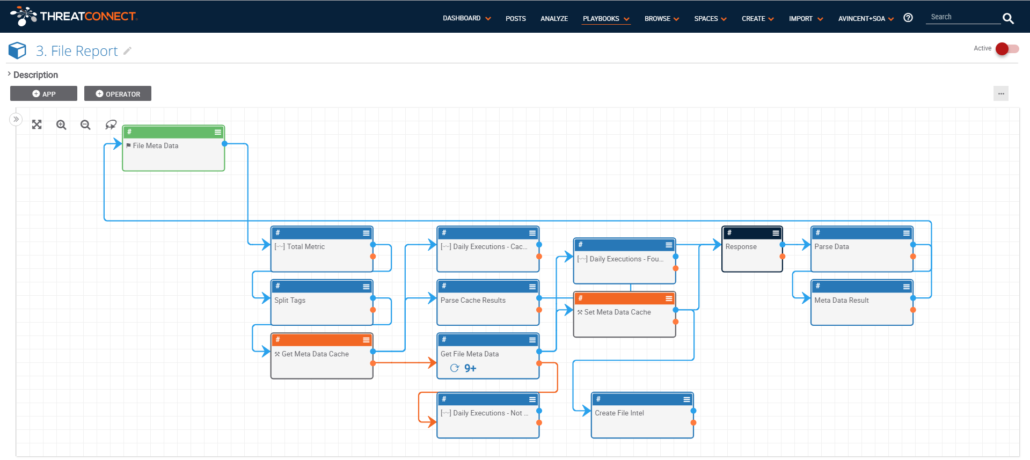

Step 3. Create a Playbook Component, “Retrieve File Report,” that collects knowledge from one or more external services and returns it to the Malware Analysis Service Playbook. Of particular interest, this Playbook calls a caching component that returns the previous result if the cached entry is less than 30 days old.

Basic Flow:

- Receive username and file indicator as input

- Determine if file indicator is in cache (<30 days)

- If no cache hit then send query to file analysis and enrichment service and update ThreatConnect database and cache

- Return JSON response

Metrics:

- Daily Executions – Total

- Daily Executions – Cached

- Daily Executions – File Not Found

- Daily Executions – File Found
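Here is a minimal Python sketch of the Retrieve File Report logic with the 30-day cache check. The in-memory dictionary stands in for the cache components described in Step 4, `query_external` stands in for the external file analysis service, and the ThreatConnect database update and per-day metric buckets are left out for brevity; all of these are simplifying assumptions.

```python
import time
from collections import Counter
from typing import Callable, Dict, Optional, Tuple

MAX_AGE_SECONDS = 30 * 24 * 60 * 60  # the 30-day cache window from Step 3

Report = Dict[str, object]
# Hypothetical in-memory cache: file hash -> (report, epoch seconds when fetched)
Cache = Dict[str, Tuple[Optional[Report], float]]


def retrieve_file_report(
    file_hash: str,
    cache: Cache,
    query_external: Callable[[str], Optional[Report]],
    metrics: Counter,
    now: Optional[float] = None,
) -> Optional[Report]:
    now = time.time() if now is None else now
    metrics["total"] += 1

    entry = cache.get(file_hash)
    if entry is not None and now - entry[1] < MAX_AGE_SECONDS:
        metrics["cached"] += 1              # fresh hit: no external call
        report = entry[0]
    else:
        report = query_external(file_hash)  # miss or stale: ask the service
        cache[file_hash] = (report, now)    # store the result (even "not found")

    metrics["found" if report else "not_found"] += 1
    return report
```

A cache hit does not refresh the stored timestamp, so an entry expires 30 days after it was fetched from the external service, no matter how often it is read in between.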

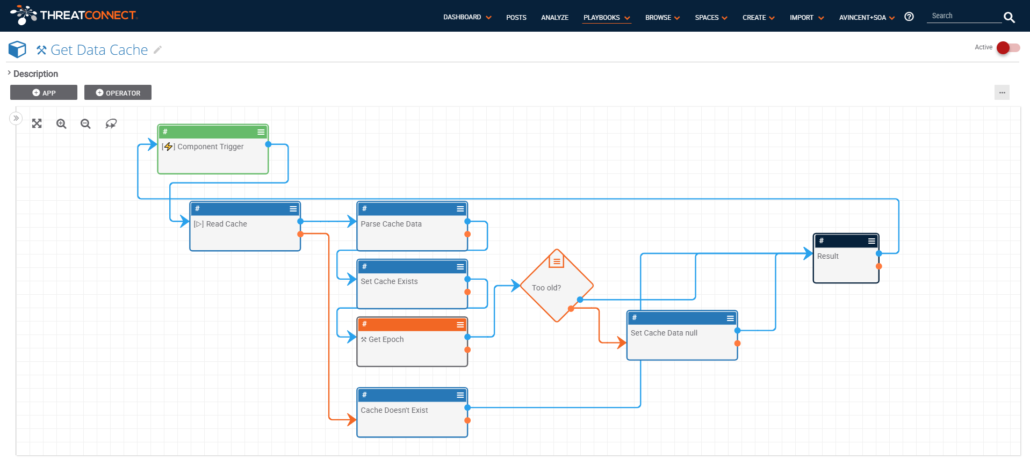

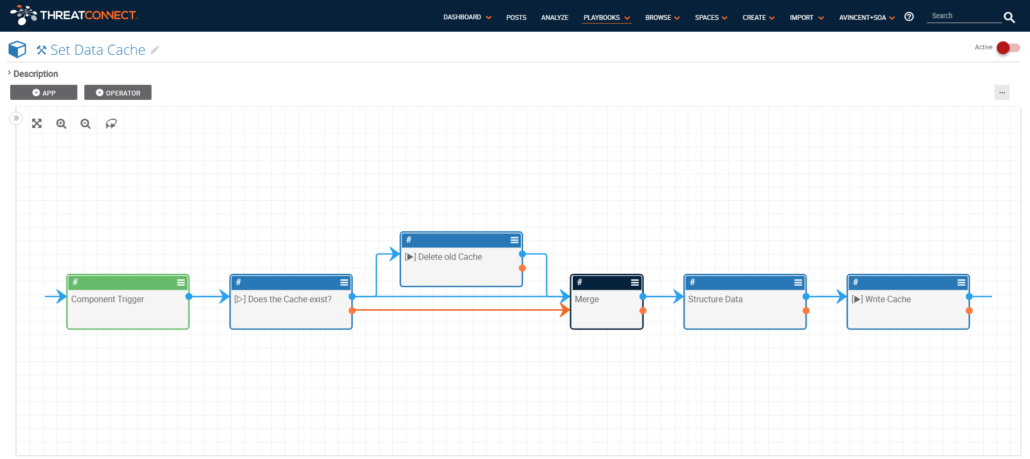

Step 4. Create Playbook Components for “Set Data Cache” and “Read Data Cache”. Both components are built on the ThreatConnect DataStore capability, which supports CRUD (Create, Read, Update, Delete) operations.

Basic Flow (Read Data Cache):

- Receive file indicator as input

- If it exists in the cache, get the data and timestamp from the DataStore. Otherwise, return null.

- Call the Get Delta Epoch Component with the cache max age as the delta. This returns the cutoff epoch, i.e., the current time minus the maximum age.

- If the Get Delta Epoch result is less than the cache timestamp, the data is still valid.

- Otherwise, the data is stale; return null.
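The Read Data Cache flow, including the Get Delta Epoch comparison, can be sketched in Python. A plain dictionary mapping a file indicator to `(data, epoch_timestamp)` is a hypothetical stand-in for the ThreatConnect DataStore, and the function names mirror the component names for readability only.

```python
import time
from typing import Dict, Optional, Tuple

# Hypothetical DataStore stand-in: file indicator -> (data, epoch when stored)
DataStore = Dict[str, Tuple[dict, int]]


def get_delta_epoch(max_age_seconds: int, now: Optional[int] = None) -> int:
    """Return the oldest epoch that still counts as fresh (now minus max age)."""
    now = int(time.time()) if now is None else now
    return now - max_age_seconds


def read_data_cache(store: DataStore, file_hash: str, max_age_seconds: int,
                    now: Optional[int] = None) -> Optional[dict]:
    entry = store.get(file_hash)
    if entry is None:
        return None  # not in the cache
    data, stored_at = entry
    # Valid only if the cutoff epoch is less than the cache timestamp.
    if get_delta_epoch(max_age_seconds, now) < stored_at:
        return data
    return None  # stale
```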

Basic Flow (Set Data Cache):

- Receive the file indicator and its JSON data as input

- Check whether the data already exists in the cache

- If it exists, delete it, then set it with the received data and the current epoch timestamp.

- If it doesn’t exist, set it with the received data and the current epoch timestamp.
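A matching Python sketch of Set Data Cache, using the same kind of hypothetical dictionary stand-in for the DataStore:

```python
import time
from typing import Dict, Optional, Tuple

# Hypothetical DataStore stand-in: file indicator -> (data, epoch when stored)
DataStore = Dict[str, Tuple[dict, int]]


def set_data_cache(store: DataStore, file_hash: str, data: dict,
                   now: Optional[int] = None) -> None:
    timestamp = int(time.time()) if now is None else now
    if file_hash in store:
        # Mirror the flow above: delete the existing record first...
        del store[file_hash]
    # ...then write the received data with the current epoch timestamp.
    store[file_hash] = (data, timestamp)
```

With a Python dictionary the explicit delete is redundant, because assignment already overwrites; it is kept only to mirror the flow above. In a store whose create operation does not overwrite an existing record, delete-then-create is a simple way to get upsert behavior.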

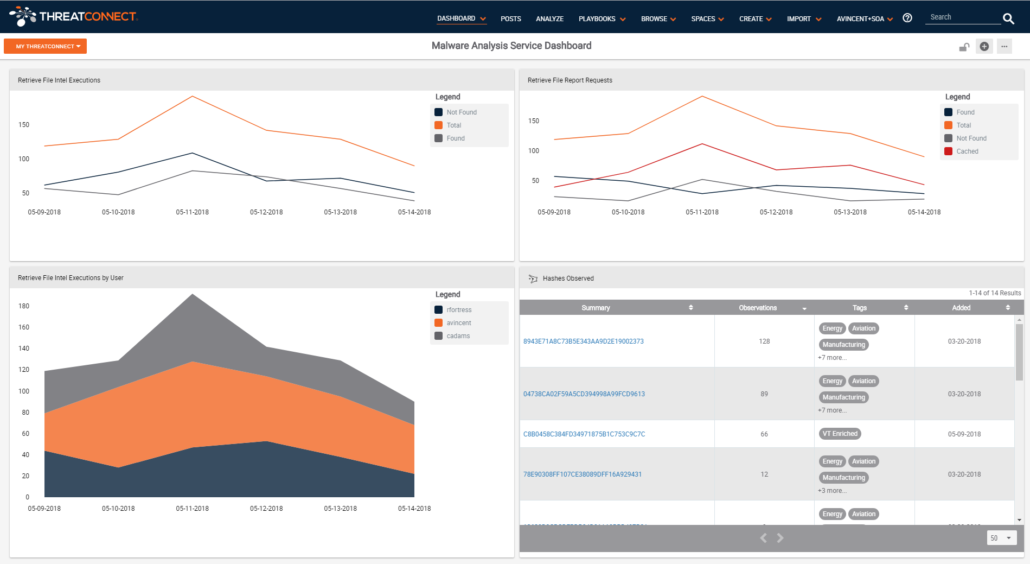

Step 5. Now that the service’s capabilities are in place and API calls are arriving from users, we can create a dashboard to monitor the service’s usage.

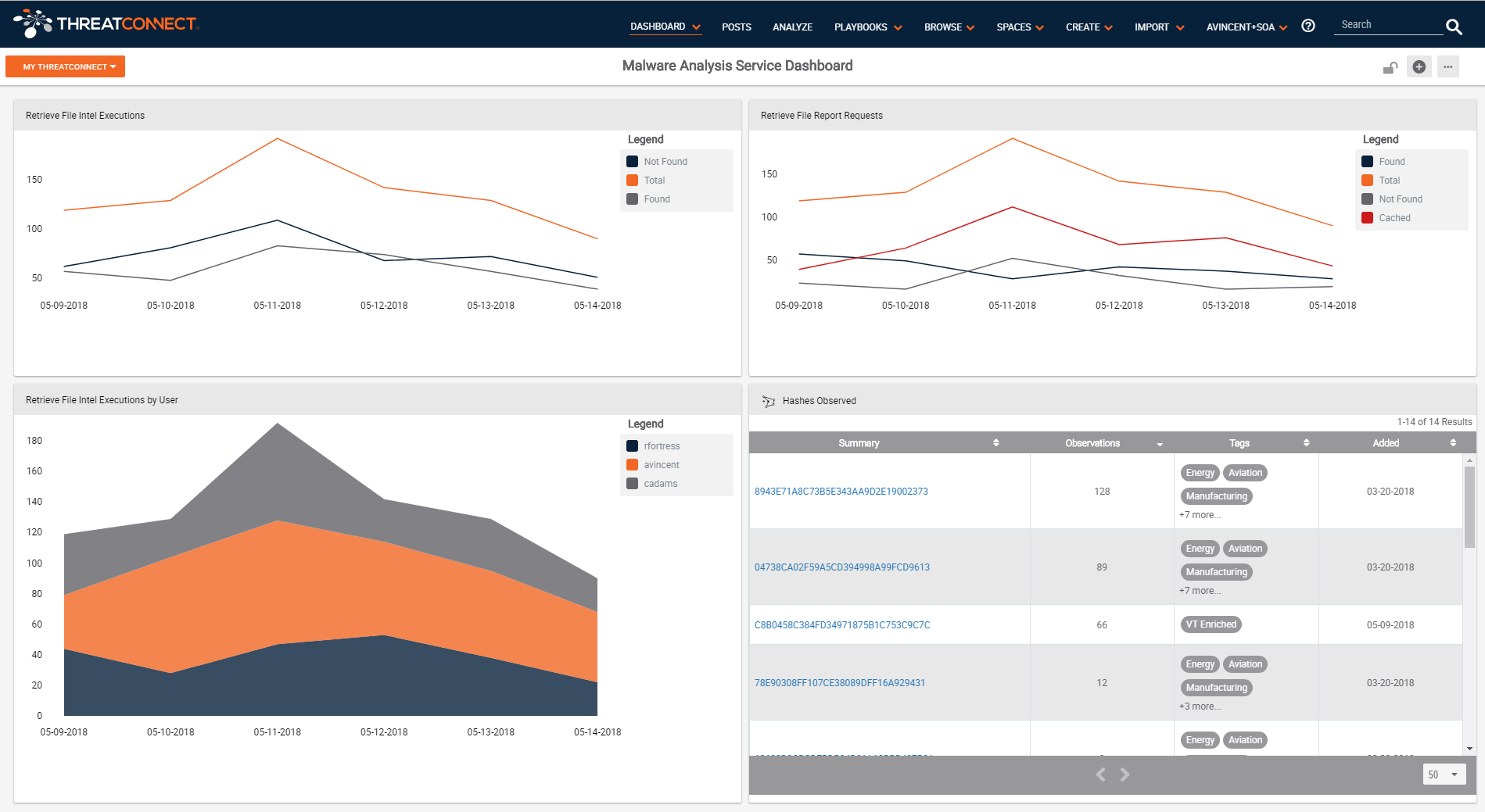

The screen capture above shows a dashboard that lets us quickly see how the Malware Analysis Service is being leveraged (top left) and how the external enrichment services are being used as part of the service (top right). The bottom left shows who is using the Malware Analysis Service and who the heavy users are. The bottom right lists the most frequently observed file indicators.
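The two bottom cards boil down to frequency counts. As a hypothetical illustration (the dashboard itself is built from the Playbook metrics, not from this code), given a log of `(username, file_indicator)` pairs, Python’s `collections.Counter.most_common` produces both rankings:

```python
from collections import Counter
from typing import Iterable, List, Tuple

Ranking = List[Tuple[str, int]]


def usage_rankings(events: Iterable[Tuple[str, str]], n: int = 5) -> Tuple[Ranking, Ranking]:
    """Return (heaviest users, most-observed indicators) from (username, indicator) pairs."""
    pairs = list(events)  # accept any iterable, including generators
    users = Counter(user for user, _ in pairs)
    indicators = Counter(indicator for _, indicator in pairs)
    return users.most_common(n), indicators.most_common(n)


log = [("alice", "hash-a"), ("bob", "hash-a"), ("alice", "hash-b"), ("alice", "hash-a")]
top_users, top_indicators = usage_rankings(log, n=2)
print(top_users)       # [('alice', 3), ('bob', 1)]
print(top_indicators)  # [('hash-a', 3), ('hash-b', 1)]
```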

Looking at the top right dashboard card, and the red line specifically, you can see how effective your cache is relative to the service’s overall queries. As mentioned earlier, external services are often rate-limited or charged per query, so it is a good idea to measure cache effectiveness and see how you can tune it for better performance. Combined with an understanding of the per-query cost savings, this can be presented as one component of the value the service adds.
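To turn the cache line into numbers you can present, divide cached queries by total queries for the hit rate and multiply cached queries by the per-query price for the savings. The sketch below does exactly that; the figures in the usage line (1,000 queries, 400 served from cache, 0.25 per external query) are made up for illustration.

```python
from typing import Dict


def cache_effectiveness(total_queries: int, cached_queries: int,
                        cost_per_external_query: float) -> Dict[str, float]:
    """Summarize cache performance for one reporting period."""
    if total_queries <= 0:
        return {"hit_rate": 0.0, "external_calls": 0, "estimated_savings": 0.0}
    return {
        # Fraction of queries answered without calling the external service.
        "hit_rate": cached_queries / total_queries,
        # Queries that still had to go to the external service.
        "external_calls": total_queries - cached_queries,
        # Calls avoided times what each call would have cost.
        "estimated_savings": cached_queries * cost_per_external_query,
    }


report = cache_effectiveness(total_queries=1000, cached_queries=400,
                             cost_per_external_query=0.25)
print(report)  # {'hit_rate': 0.4, 'external_calls': 600, 'estimated_savings': 100.0}
```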

On June 5th at 10:30 a.m., Ron Richie and Pat Opet from J.P. Morgan Chase & Co. and I are presenting at the Gartner Security and Risk Summit in Potomac B, Ballroom Level. Our presentation is titled “Architecting For Speed: Building a Modern Cyber-Service-Oriented Architecture” and will describe a modern service-enabled stack, positioned for critical security decisions at speed.

If you like what you see here, check out the Playbooks and Components repository on GitHub.